AI Agents Platform

The Challenge

When our team pivoted to Lindy.ai, we started with what seemed like an obvious idea: build the ultimate AI personal assistant. Something that could manage schedules, write emails, and handle tasks through natural conversation.

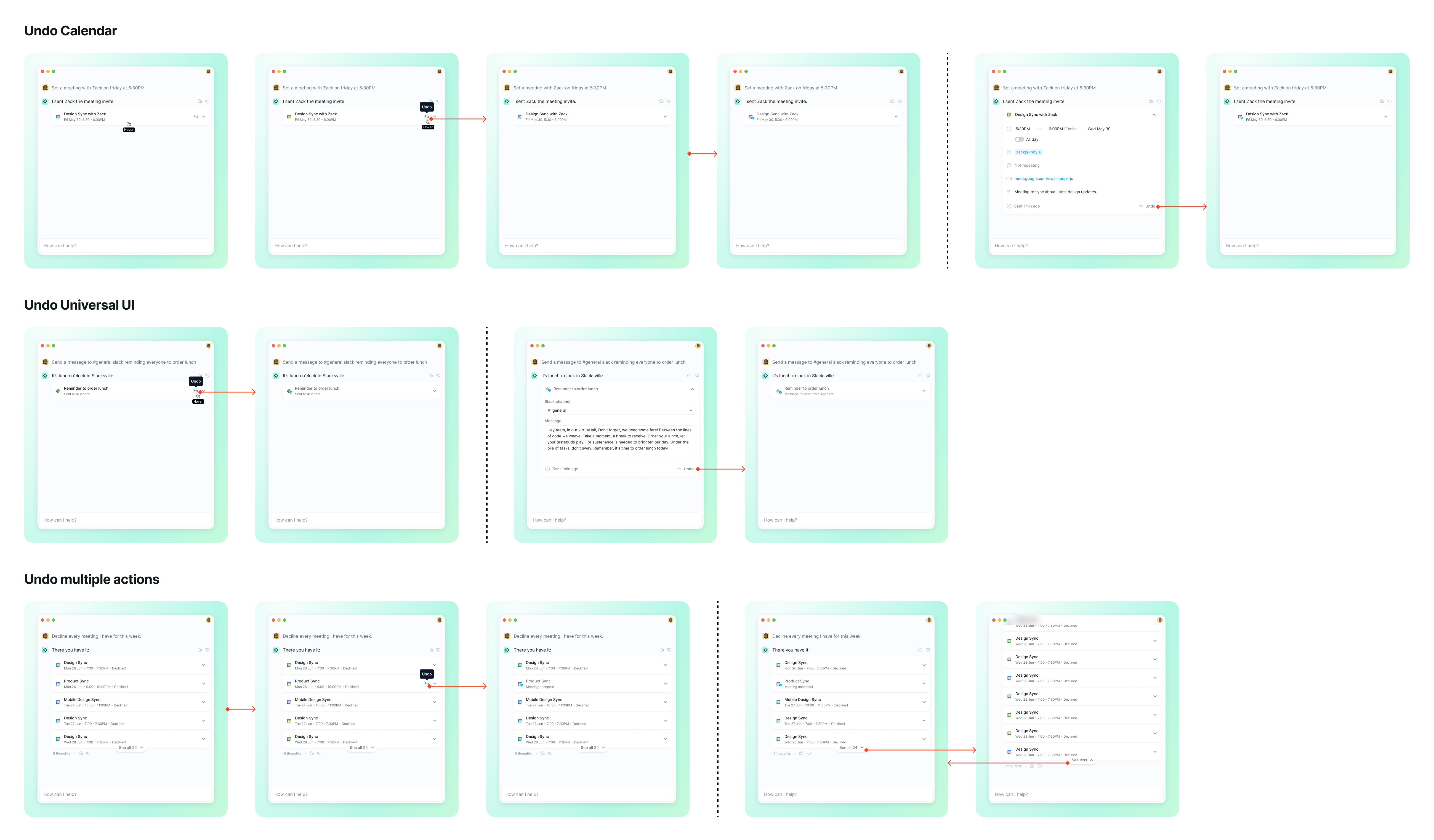

Early design concept as we explored the shift from assistant to platform

But we quickly discovered we were solving the wrong problem.

The market was skeptical of AI tools that acted as black boxes—impressive demos that fell apart in real-world use. But more fundamentally, people didn’t want a one-size-fits-all assistant. They wanted to build their own AI agents tailored to their specific workflows and business needs. The real opportunity wasn’t in building the assistant—it was in building the platform that let anyone create their own.

My Role

As the founding designer, I led all product design efforts from concept through launch. This included user research, interaction design, visual design, and establishing our design system. I worked directly with our engineering team to ship weekly iterations and maintained close contact with users throughout the product development cycle.

Discovery: The Pivot That Changed Everything

Rather than designing in isolation, we made a commitment that shaped everything we built: every Friday, we’d sit with new users during their onboarding experience. No filters, no cherry-picking—just real people trying to use Lindy for the first time.

These Friday sessions didn’t just inform our design decisions—they fundamentally changed our product strategy.

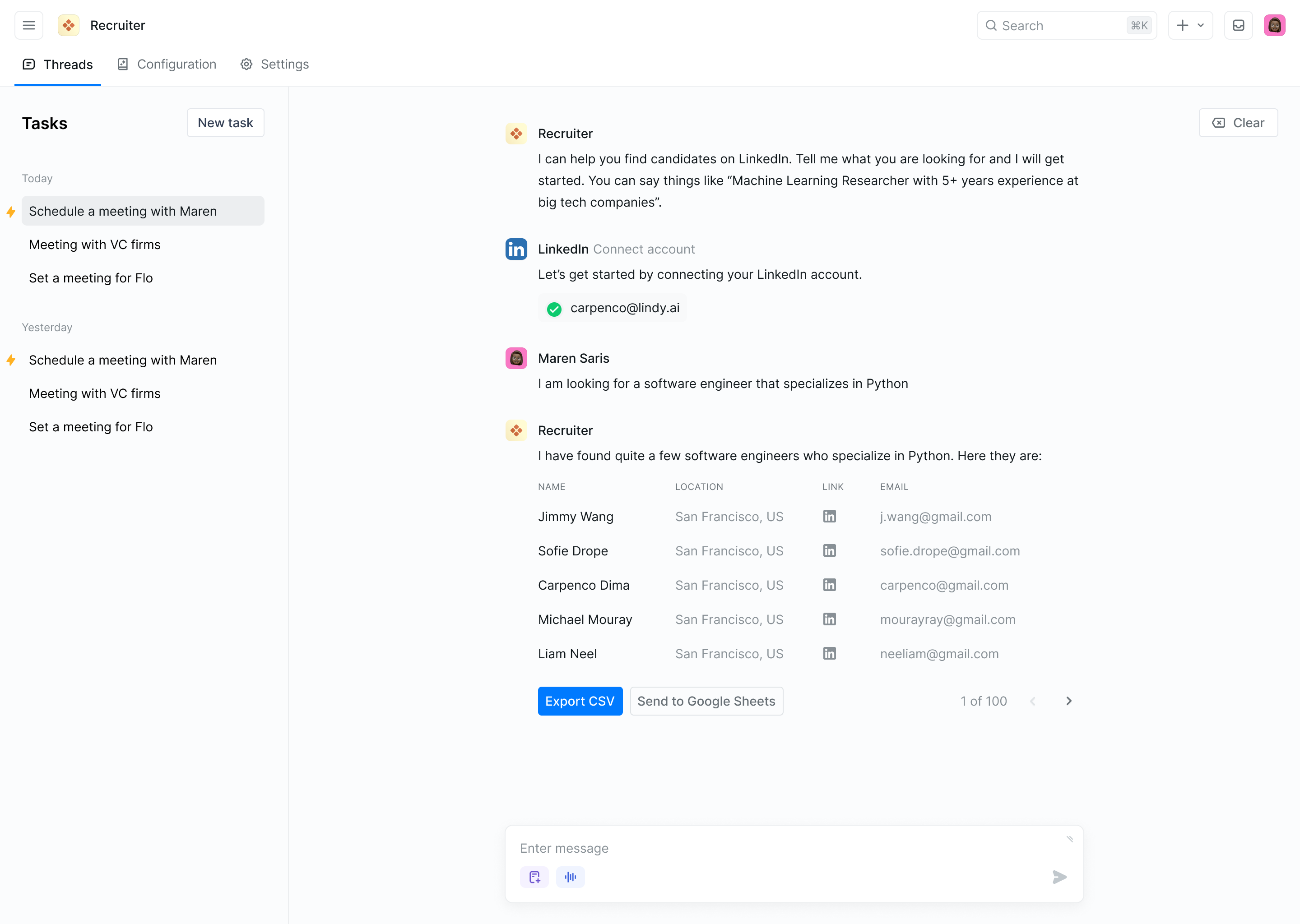

In our early sessions, we kept hearing the same pattern: “This is great, but can I make it do X?” Users weren’t asking for a better assistant—they were asking for the ability to build their own. A sales team wanted an agent that qualified leads. A support team wanted one that triaged tickets. A recruiter wanted one that screened candidates.

The insight was clear: every team had unique workflows that no pre-built assistant could serve. They needed a platform to create custom AI agents, not a single assistant trying to do everything.

This realization reframed our entire design challenge. Instead of building one experience, I now needed to design a system that empowered non-technical users to become AI agent creators.

Design Principles for an Agent Platform

From these Friday sessions, three core principles emerged that shaped every design decision:

1. Make Complexity Optional: Users could start with simple, pre-built agent templates and progressively customize as they gained confidence. The platform scaled from “click to deploy” to “build from scratch” without requiring users to master everything upfront.

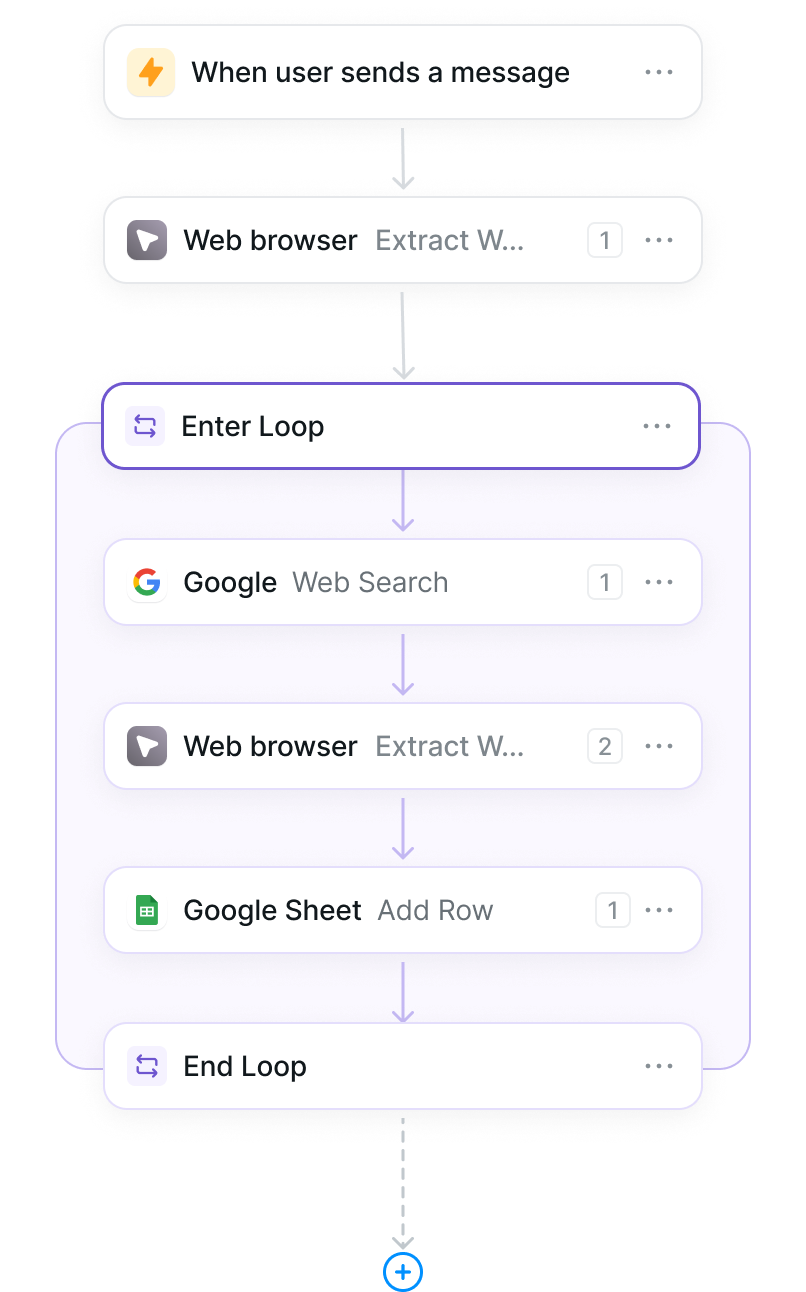

2. Show, Don’t Tell: Every agent action, whether drafting an email, updating a spreadsheet, or calling an API, was visualized in the workflow editor. Users could see the logic, test it safely, and understand exactly what would happen before deploying.

3. Familiar Patterns, New Capabilities: Rather than inventing entirely new paradigms, we borrowed from tools people already knew: flowcharts for logic, chat interfaces for testing, and dashboards for monitoring. This made AI agent creation feel learnable, not alien.

The Design Process: Rapid Iteration in Production

Our process was unconventional. Instead of lengthy design sprints, we embraced extreme iteration:

Weekly Design Cycles: I’d design, we’d ship, and users would react—all within the same week. This meant working in higher fidelity earlier but with the understanding that nothing was precious.

Live Feedback Loops: The Friday onboarding sessions weren’t just research; they directly informed what we built the following week. If three users struggled with the same interaction, we redesigned it before the next Friday session.

Built-in Analytics: We instrumented every interaction to understand where users succeeded and where they dropped off, supplementing qualitative insights with quantitative data.

Final interface design showing workflow automation

This approach meant shipping things that weren’t perfect, but it also meant we never spent weeks building the wrong thing. We learned faster than our competitors because we were in constant contact with reality.
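To make the built-in analytics idea concrete, here is a minimal sketch of how drop-off could be counted per onboarding step. The step names and the event format are hypothetical, chosen only for illustration; the case study does not describe Lindy's actual instrumentation.

```python
from collections import Counter

# Hypothetical onboarding steps, in order. These names are assumptions
# for illustration, not Lindy's real event names.
FUNNEL_STEPS = [
    "signed_up",
    "picked_template",
    "edited_workflow",
    "tested_agent",
    "deployed_agent",
]


def furthest_step(events):
    """Return the index of the last consecutive funnel step reached, or -1."""
    reached = -1
    for i, step in enumerate(FUNNEL_STEPS):
        if step in events:
            reached = i
        else:
            break
    return reached


def drop_off_by_step(user_events):
    """Count how many users stopped right after each step.

    Users who completed the final step are not counted as drop-offs.
    """
    counts = Counter()
    for events in user_events.values():
        idx = furthest_step(events)
        if 0 <= idx < len(FUNNEL_STEPS) - 1:
            counts[FUNNEL_STEPS[idx]] += 1
    return dict(counts)


# Made-up sample data: each user maps to the set of events they triggered.
sample = {
    "user_a": {"signed_up", "picked_template"},
    "user_b": {"signed_up", "picked_template", "edited_workflow"},
    "user_c": {"signed_up", "picked_template"},
    "user_d": set(FUNNEL_STEPS),
}

print(drop_off_by_step(sample))
```

A count like this only shows where people stop. The Friday sessions were what explained why.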

Key Design Solutions

Visual Agent Builder: We developed a flowchart editor that let users design their AI agents visually. Instead of writing code or complex prompts, they could drag and drop actions, set conditions, and connect integrations. This made AI agent logic concrete and debuggable.

Testing Playground: Before deploying an agent, users could test it in a sandbox environment. They could see exactly how it would respond to different inputs, catch errors early, and build confidence in their agent’s behavior.

Template Library: Rather than starting from scratch, users could begin with proven agent templates (customer support, lead qualification, data enrichment) and customize them. This reduced time-to-value and helped users learn the platform through working examples.

Guardrails and Approval Flows: For high-stakes actions like sending emails or updating databases, we introduced approval steps that let users verify before agents took action. This single feature dramatically increased trust and unlocked business use cases that required human oversight.
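As a rough illustration of how conditions and approval steps can fit together in one agent workflow, here is a minimal sketch. The `Step` and `Workflow` classes, the lead-qualification steps, and the `approve` callback are all hypothetical names invented for this example; they are not Lindy's actual data model or API.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class Step:
    """One node in the flowchart (hypothetical structure)."""
    name: str
    run: Callable[[dict], dict]  # takes the context, returns an updated copy
    requires_approval: bool = False  # high-stakes steps wait for a human
    condition: Optional[Callable[[dict], bool]] = None  # skip step when False


@dataclass
class Workflow:
    steps: List[Step]
    log: List[Tuple[str, str]] = field(default_factory=list)

    def execute(self, context: dict, approve: Callable[[str, dict], bool]) -> dict:
        for step in self.steps:
            if step.condition is not None and not step.condition(context):
                self.log.append((step.name, "skipped"))
                continue
            # Approval gate: stop the run if the human reviewer says no.
            if step.requires_approval and not approve(step.name, context):
                self.log.append((step.name, "rejected"))
                break
            context = step.run(context)
            self.log.append((step.name, "done"))
        return context


# A made-up lead-qualification agent.
def score_lead(ctx):
    return {**ctx, "qualified": ctx["employees"] >= 50}


def draft_email(ctx):
    return {**ctx, "draft": f"Hi {ctx['name']}, thanks for your interest."}


def send_email(ctx):
    return {**ctx, "sent": True}


def is_qualified(ctx):
    return ctx["qualified"]


workflow = Workflow(steps=[
    Step("score_lead", score_lead),
    Step("draft_email", draft_email, condition=is_qualified),
    Step("send_email", send_email, requires_approval=True, condition=is_qualified),
])

# The reviewer rejects the send, so the draft exists but nothing goes out.
result = workflow.execute(
    {"name": "Dana", "employees": 120},
    approve=lambda step_name, ctx: False,
)
print(workflow.log)
```

Because the run log records every step as done, skipped, or rejected, the same structure also supports the "Show, Don’t Tell" principle: a user can read back exactly what the agent did and where a human stopped it.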

Results and Impact

The pivot to a platform strategy, informed by continuous user research, paid off dramatically:

- $15M in first-year revenue after launch, validating that we’d found true product-market fit by letting users build custom agents for their specific needs

- High user retention driven by trust in the platform—users who created their first agent typically went on to build several more for different workflows

- Reduced average onboarding time from 45 minutes to under 15 minutes through continuous UX refinement based on our Friday sessions

- Strong word-of-mouth growth as users became advocates, showing colleagues the agents they’d built and how easy it was to create their own

- Platform adoption across use cases: From sales teams automating lead qualification to support teams triaging tickets, the platform proved valuable across industries because users could adapt it to their unique workflows

What I Learned

Listen even when it means changing direction: We started building a personal assistant, but our users told us they needed something else. Having the humility to pivot based on that feedback—rather than pushing forward with our original vision—made all the difference. Product-market fit came from listening, not from being right.

Stay close to the new user experience: It’s easy to design for power users once you know the product intimately. Our Friday ritual forced me to remember what it felt like to encounter Lindy for the first time, every single week. This practice kept us honest about complexity and prevented us from building for ourselves.

Ship to learn, especially with AI: Users will interact with your AI product in ways you can’t predict in a design file. The faster you can get real usage data, the faster you can build something truly useful. In AI design, real-world testing isn’t optional—it’s the only way to know what works.

Design systems, not just features: Building a platform meant thinking in systems: templates, patterns, and guardrails that would work across hundreds of different use cases. This required a different design mindset than building a single-purpose tool.

Trust is your product’s foundation: Every interaction either builds or erodes trust. Being intentional about transparency, testing environments, and approval workflows turned trust from a potential blocker into our competitive advantage. Users chose Lindy because they felt safe building with it.

This project fundamentally changed how I approach product design. The best solution isn’t always your first idea—sometimes it’s the one your users tell you they need, if you’re willing to listen and adapt.